The following is a guest post contributed by Dan Wilkerson, marketing manager at LunaMetrics, a Google Analytics Certified Partner & Digital Marketing Consultancy.

A core issue with measuring social media is that, because of the way traffic moves around the web, there are many situations where referrer information is lost and those visits end up labeled as 'Direct' in our analytics.

This can happen for a variety of reasons, but the most common situations where this kind of erroneous attribution occurs are:

- When a user clicks an untagged link inside an email

- When a user visits from a mobile application

- When a user clicks a link shared to them via an instant message

If a visitor has come to your site previously, Google Analytics will simply apply the same referral information it had for their previous visit, which it retrieves from the UTMZ cookie it saved on the visitor's browser. But if there are no cookies, Analytics has no information and buckets the visitor into Direct.
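To make that fallback concrete, here is a simplified sketch of the attribution rule. It is a hypothetical model written for illustration (the `Referral` type and `attributeVisit` function are not part of Google Analytics), and it ignores details such as cookie expiry:

```typescript
// Simplified, hypothetical model of the classic (ga.js) fallback rule.
interface Referral {
  source: string;
  medium: string;
}

function attributeVisit(incoming: Referral | null, storedUtmz: Referral | null): Referral {
  if (incoming !== null) {
    return incoming; // fresh referrer or campaign data on this visit
  }
  if (storedUtmz !== null) {
    return storedUtmz; // returning visitor: reuse the referral saved in the UTMZ cookie
  }
  return { source: "(direct)", medium: "(none)" }; // no data and no cookie
}

// First-time visitor clicking an untagged link in an email, IM or mobile app:
const emailClick = attributeVisit(null, null); // → { source: "(direct)", medium: "(none)" }
// Returning visitor who originally arrived from Facebook:
const returning = attributeVisit(null, { source: "facebook.com", medium: "referral" });
```

The first call is exactly the case this post is about: nothing on the hit and nothing in the cookie, so the visit lands in Direct.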

Obviously, this is problematic; 'Direct' is supposed to represent visitors who bookmark or directly type in our URL. These users are accessing our site through a shared link, and should be counted as referrals. Thankfully, we have some tools at our disposal to combat some of these scenarios, most notably campaign parameters. But campaign parameters only help with links that you share; what about when a visitor comes to your site and shares the link themselves?
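Campaign parameters are query-string fields added to a link before you share it. A minimal sketch, assuming a hypothetical `tagLink` helper and placeholder values; only the `utm_*` parameter names are standard:

```typescript
// Appends utm_source / utm_medium / utm_campaign to a URL you are about to
// share, keeping any existing query string and #fragment intact.
function tagLink(url: string, source: string, medium: string, campaign: string): string {
  const pairs: Array<[string, string]> = [
    ["utm_source", source],
    ["utm_medium", medium],
    ["utm_campaign", campaign],
  ];
  const query = pairs.map(([key, value]) => `${key}=${encodeURIComponent(value)}`).join("&");

  // The query string must come before any #fragment.
  const hashIndex = url.indexOf("#");
  const base = hashIndex === -1 ? url : url.slice(0, hashIndex);
  const fragment = hashIndex === -1 ? "" : url.slice(hashIndex);
  const separator = base.includes("?") ? "&" : "?";
  return base + separator + query + fragment;
}

console.log(tagLink("https://www.example.com/training", "newsletter", "email", "spring-promo"));
// → https://www.example.com/training?utm_source=newsletter&utm_medium=email&utm_campaign=spring-promo
```

The limitation is visible in the signature: only whoever calls `tagLink` gets tagged links, which is why a visitor's own shares stay untagged.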

These visits can cause serious problems when it comes time to analyze your data. For example, we offer Google Analytics & AdWords training. Most of our attendees are sponsored by their employers. This means they visit our site, scope out our training, and then email a link to a procurement officer, who clicks through and makes the purchase. Since the procurement officer arrives through the emailed link and has never visited our site, the conversion gets bucketed into 'Direct / None', and we lose all of the visit data for the employee who was interested in the first place. This can compound into a sort of feedback loop: the only data we see is for individuals who buy their own tickets, so we might optimize our marketing for smaller businesses that send us fewer attendees. In other words, we'd be interpreting data from the wrong customers. Imagine how this kind of feedback loop might affect a B2B company trying to generate enterprise-level leads; since they'd only see information on the small fry, they could wind up driving more of the wrong kind of lead to their sales team, and fewer of the right kind.

For a long time, this has been sort of the status quo. Now, with new features available in Universal Analytics, we have some tools we can employ to combat this problem. In this post, I want to share with you a solution that I've developed to reduce the amount of Direct traffic. We're calling it DirectMonster, and we're really excited to make it open source and available to the Google Analytics community.

What is DirectMonster?

DirectMonster is a JavaScript plug-in for Google Analytics that appends a visitor's referral information, as ciphered campaign parameters, to the anchor (the # fragment) of the current URL. The result looks something like this:

When the visitor copies and shares the URL from the address bar, they copy that stored referral information along with it. When someone without referral information lands on the site through a link with those encoded parameters, the script decodes that information into campaign parameters to pass along to Google Analytics, waits until Analytics writes a fresh UTMZ cookie, and then ciphers, encodes, and re-appends the visitor's current referral information. It also appends '-slb' to the utm_content parameter, so those visits can be segmented from 'canonical' referrals for later analysis if necessary. The visitor who would have had no referral information is now credited as being referred from the same source as the visitor who shared the link with them. This means that visits that would normally have been erroneously segmented as 'Direct / None' now more accurately reflect the channel that deserves credit for the visit.
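The round trip described above can be sketched in a few functions. This is not DirectMonster's source: the `#dm=` prefix, the `|` delimiter, ROT13 as the cipher, and the names `encodeToHash`, `decodeFromHash` and `inheritReferral` are all assumptions made for illustration. Reading the UTMZ cookie and writing `location.hash` are left out so the logic can run outside a browser.

```typescript
// Sketch only: the "#dm=" prefix, the "|" delimiter and the ROT13 cipher are
// illustrative assumptions, not DirectMonster's actual format.
interface Campaign {
  source: string;  // utm_source
  medium: string;  // utm_medium
  name: string;    // utm_campaign
  content: string; // utm_content
}

const PREFIX = "#dm=";
const FIELDS = ["source", "medium", "name", "content"] as const;

// ROT13 over ASCII letters: obscures the values but is not encryption.
// Applying it twice returns the original text.
function rot13(text: string): string {
  return text.replace(/[a-zA-Z]/g, (ch) => {
    const base = ch <= "Z" ? 65 : 97;
    return String.fromCharCode(((ch.charCodeAt(0) - base + 13) % 26) + base);
  });
}

// Cipher each field, then percent-encode it (so a "|" inside a value cannot
// collide with the delimiter) and build the URL fragment.
function encodeToHash(c: Campaign): string {
  return PREFIX + FIELDS.map((f) => encodeURIComponent(rot13(c[f]))).join("|");
}

// Reverse of encodeToHash; returns null if the fragment isn't in this format.
function decodeFromHash(hash: string): Campaign | null {
  if (!hash.startsWith(PREFIX)) return null;
  const parts = hash.slice(PREFIX.length).split("|");
  if (parts.length !== FIELDS.length) return null;
  try {
    const [source, medium, name, content] = parts.map((p) => rot13(decodeURIComponent(p)));
    return { source, medium, name, content };
  } catch {
    return null; // malformed percent-encoding
  }
}

// On landing: a visitor with no referral of their own inherits the sharer's
// campaign, with "-slb" appended to utm_content so these visits can be
// segmented later. Returns null when nothing should change.
function inheritReferral(hash: string, hasOwnReferral: boolean): Campaign | null {
  if (hasOwnReferral) return null;
  const shared = decodeFromHash(hash);
  if (shared === null) return null;
  const content = shared.content.endsWith("-slb") ? shared.content : shared.content + "-slb";
  return { ...shared, content };
}

const hash = encodeToHash({ source: "facebook.com", medium: "referral", name: "(not set)", content: "" });
const inherited = inheritReferral(hash, false);
console.log(hash, inherited);
```

The `endsWith` check is a design choice in this sketch, so that a link shared more than once doesn't accumulate '-slb-slb'.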

At first, this might seem wrong: shouldn't we just let Analytics do its job and not interfere? But those visits aren't really Direct, at least not in the truest sense, and having 'assisted referrer' channel information gives you actionable insight. Plus, by weeding out those non-Direct scenarios, your Direct / None numbers will more accurately represent visitors who come to your site directly, which can be very important for other measurement and attribution. It's actually better all the way around. After all, if a Facebook share is what ultimately drove that visitor to your site, isn't having that information more valuable than having nothing at all? This way, you'll have last-click attribution for conversions that otherwise would have simply been bucketed as Direct. Of course, you won't have the visit history of the assisting referrer, but... well, more on that soon.

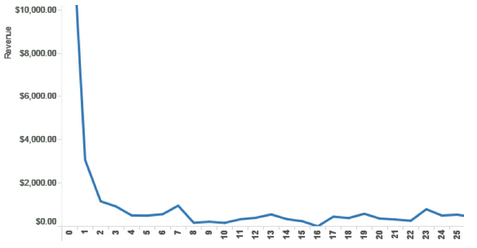

We've been fine-tuning this on our site for the past few months, and it has greatly improved the accuracy of our conversion attribution. In our video case study, I mentioned that we had enhanced attribution by 47.5%. Since then, accuracy has continued to climb: 'Direct / None' used to account for 45.5% of our conversions, and it now accounts for just 20.6%, a relative decrease of 54.7%. Better yet, look at what it's done to all of our traffic:

We've gone from having about 20-25% of our traffic come in 'Direct / None' to just under 15%, and I anticipate that number will continue to fall.
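For reference, the 54.7% figure for conversions is a relative decrease in the share attributed to 'Direct / None', not a difference in percentage points. Worked out from the two shares quoted earlier:

$$
\frac{45.5\% - 20.6\%}{45.5\%} = \frac{24.9}{45.5} \approx 0.547 = 54.7\%
$$

In absolute terms, that is a drop of 24.9 percentage points.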

DirectMonster and Universal Analytics

One of the coolest features that Universal Analytics has given us is Custom Dimensions. If you're not familiar with them, take a minute to read the Google Developer Resources page about what they are and how they work. Although DirectMonster was initially designed for the asynchronous (ga.js) code, Universal Analytics has allowed us to put it on steroids.

In our Universal implementation, we store the visitor's client ID (CID) as a visit-level custom dimension, and we add their CID to the ciphered parameters we're already storing in the anchor of their URL.

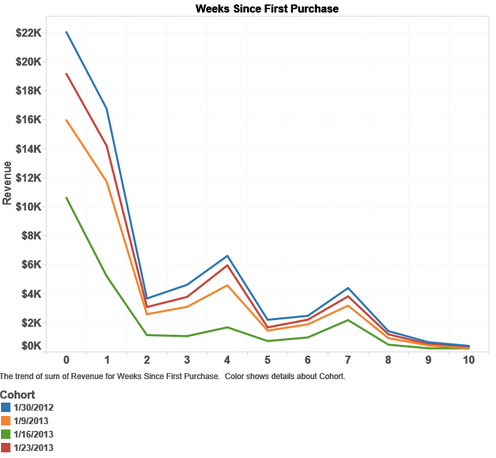
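A minimal sketch of that CID step, assuming the analytics.js tracker methods `get('clientId')` and `set(...)`, a custom dimension at index 1 (hypothetical; the real index depends on your property's configuration), and a hypothetical `#key=value&...` fragment layout. The helpers `recordClientId` and `setFragmentField` are illustrative names, not part of DirectMonster or analytics.js:

```typescript
// Minimal shape of an analytics.js tracker: only the two methods used here.
interface Tracker {
  get(fieldName: string): string;
  set(fieldName: string, value: string): void;
}

// Assumption: custom dimension 1 was created in the GA admin with session
// ("visit-level") scope. The index is site-specific.
const VISITOR_CID_DIMENSION = "dimension1";

// Copies the visitor's client ID into the custom dimension so it is sent
// with subsequent hits from this tracker.
function recordClientId(tracker: Tracker): string {
  const cid = tracker.get("clientId");
  tracker.set(VISITOR_CID_DIMENSION, cid);
  return cid;
}

// Adds (or replaces) a key=value entry in a "#k1=v1&k2=v2" fragment.
// The fragment layout is a hypothetical stand-in for DirectMonster's format.
function setFragmentField(fragment: string, key: string, value: string): string {
  const pairs = fragment
    .replace(/^#/, "")
    .split("&")
    .filter((pair) => pair !== "" && !pair.startsWith(key + "="));
  pairs.push(`${key}=${encodeURIComponent(value)}`);
  return "#" + pairs.join("&");
}

// In the browser this could be wired up roughly as:
//   ga('create', 'UA-XXXXX-Y', 'auto');
//   ga(function (tracker) {
//     const cid = recordClientId(tracker);
//     location.hash = setFragmentField(location.hash, 'cid', cid);
//   });
//   ga('send', 'pageview');
```

Note that the session scope itself is configured when you create the dimension in the Analytics admin; the code only supplies the value.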

When a visitor comes through on a link with a CID that differs from their own, we capture the stored CID as the Assisted Referrer. Later, we can open up our Custom Reports and see which visitors were referred to our site, by whom, and what they did when they got there.
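The comparison on landing might look like this. Again a sketch: the dimension index, the `cid` fragment key and the function names are assumptions, and `setField` stands in for a tracker's `set` method so the logic can run outside the browser:

```typescript
// Assumption: custom dimension 2 (session scope) holds the assisted
// referrer's CID; the index is site-specific.
const ASSISTED_REFERRER_DIMENSION = "dimension2";

// Same shape as an analytics.js tracker's set() method.
type SetField = (fieldName: string, value: string) => void;

// Reads the "cid" entry from a hypothetical "#k1=v1&k2=v2" fragment.
function readCidFromFragment(fragment: string): string | null {
  for (const pair of fragment.replace(/^#/, "").split("&")) {
    const eq = pair.indexOf("=");
    if (eq > 0 && pair.slice(0, eq) === "cid") {
      try {
        return decodeURIComponent(pair.slice(eq + 1));
      } catch {
        return null; // malformed percent-encoding
      }
    }
  }
  return null;
}

// If the link carries someone else's CID, record it as the assisted referrer.
function captureAssistedReferrer(fragment: string, ownCid: string, setField: SetField): string | null {
  const sharedCid = readCidFromFragment(fragment);
  if (sharedCid === null || sharedCid === "" || sharedCid === ownCid) {
    return null; // no CID in the link, or the visitor is revisiting their own link
  }
  setField(ASSISTED_REFERRER_DIMENSION, sharedCid);
  return sharedCid;
}
```

The equality check matters: without it, a visitor reopening a link they copied themselves would be recorded as their own assisted referrer.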

What does this mean? If a celebrity tweets a link to your product, you can discover exactly how many visitors they referred, and how much revenue those visitors generated.
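Once the assisted-referrer dimension is populated, that question reduces to grouping sessions by it. The sketch below assumes you have exported session rows from a custom report into a hypothetical `SessionRow` shape; it counts distinct referred visitors and sums their revenue per assisted referrer:

```typescript
// Hypothetical row shape for an exported custom report; field names are illustrative.
interface SessionRow {
  assistedReferrerCid: string; // "" when the session had no assisted referrer
  visitorCid: string;
  revenue: number;
}

interface ReferrerTotals {
  visitors: number; // distinct visitors referred
  revenue: number;  // revenue from all of their sessions
}

function totalsByAssistedReferrer(rows: SessionRow[]): Map<string, ReferrerTotals> {
  const visitors = new Map<string, Set<string>>();
  const revenue = new Map<string, number>();
  for (const row of rows) {
    const ref = row.assistedReferrerCid;
    if (ref === "") continue; // session didn't arrive through a shared link
    const seen = visitors.get(ref) ?? new Set<string>();
    seen.add(row.visitorCid);
    visitors.set(ref, seen);
    revenue.set(ref, (revenue.get(ref) ?? 0) + row.revenue);
  }
  const totals = new Map<string, ReferrerTotals>();
  visitors.forEach((seen, ref) => {
    totals.set(ref, { visitors: seen.size, revenue: revenue.get(ref) ?? 0 });
  });
  return totals;
}
```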

By cross-referencing the Assisted CID for single-visit 'Direct / None' purchases, you can discover the true visit history of a conversion.
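The cross-reference works in the other direction: find single-visit conversions that carry an assisted CID, then pull the sessions recorded under that CID. A sketch over a hypothetical session export (the `Session` fields and `assistingHistory` are illustrative, not an Analytics API):

```typescript
// Hypothetical session-level export; field names are illustrative.
interface Session {
  visitorCid: string;
  assistedReferrerCid: string; // "" when the session had no assisted referrer
  converted: boolean;
  date: string; // ISO date such as "2014-01-15", so string order is date order
}

// For every visitor whose only session converted and carried an assisted CID,
// return the assisting visitor's own sessions in date order.
function assistingHistory(sessions: Session[]): Map<string, Session[]> {
  const byVisitor = new Map<string, Session[]>();
  for (const s of sessions) {
    const list = byVisitor.get(s.visitorCid) ?? [];
    list.push(s);
    byVisitor.set(s.visitorCid, list);
  }

  const result = new Map<string, Session[]>();
  byVisitor.forEach((own, cid) => {
    if (own.length !== 1) return; // only single-visit converters
    const only = own[0];
    if (!only.converted || only.assistedReferrerCid === "") return;
    const history = (byVisitor.get(only.assistedReferrerCid) ?? [])
      .slice()
      .sort((a, b) => a.date.localeCompare(b.date));
    result.set(cid, history);
  });
  return result;
}
```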

Since it takes advantage of advanced Universal Analytics functionality, DirectMonster 2.0 requires a more advanced implementation as well. Unlike the original version, it requires you to adjust your Analytics tracking code to include a few functions and to configure the Custom Dimensions that will store the visitor's CID and the assisted referrer's CID. For a full reference on how to get either version of DirectMonster and configure it for your site, check out our blog post covering the topic in detail here, or visit our GitHub page and get DirectMonster for yourself.

I hope that you're as excited as I am about this development and all of the things Universal Analytics is enabling us to do. Think of a use case I didn't mention? Share it with me in the comments!

Posted by Dan Wilkerson, marketing manager at LunaMetrics