There are many ways to measure the effectiveness of organic search engine marketing. We’d like to explore various techniques in a series of posts here on the Analytics blog. Today we’ll talk about understanding organic traffic using landing pages and Webmaster Tools data.

Today, almost all marketers are investing heavily in creating high-quality content as a way to reach users with information about their products and services. The content can take many forms, from product-specific to brand-specific content. The intent is to generate traffic and conversions from a variety of sources, one of the largest of which is often search.

One way to measure the effectiveness of content is to analyze its performance as a landing page. A landing page is the first page a user sees when they land on your site. If it’s great content, and if it’s ranked highly by search engines like Google, then you should see a lot of website ‘entrances’ via that page. Looking at landing page performance, and the traffic that flows through specific landing pages, is a great way to analyze your search engine optimization efforts.

Begin by downloading this custom report (this link will take you to your Analytics account). This report shows the landing pages that receive traffic from Google organic search and how well the traffic performs.

Let’s start at the top. The over-time graph shows the trend of Google organic traffic for your active date range. If you are creating great content that is linked to and shared then you should see the trend increasing over time.

When you look at this data ask yourself the question: how well does the trend align with my time investment? Looking at the data below we see that the organic traffic is increasing, so this organization must be working hard to create and share good content.

Organic traffic is steadily increasing for this site. An important question to ask is, “how does this align with my search optimization efforts?”

The table, under the trend data, contains detailed data about the acquisition of users, their behavior on the site and ultimately the conversions that they generate. This includes data like Visits, % New Visits, Bounce Rate, Average Time on Site, Goal Conversion Rate, Revenue and Per Visit Value.

Using the tabular data I can learn how search engine traffic entering through a specific page is performing.

Each metric provides insight about users coming from organic search and entering through certain pages. For example, % New Visits can help you understand if you’re attracting a new audience or a lot of repeat users. Bounce rate can help you understand if your content is ‘sticky’ and interesting to users. And conversion rate helps you understand if organic traffic, flowing through these landing pages, is actually converting and driving value to your business.
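To make these metrics concrete, here is a minimal sketch of how the rates relate to raw counts for one landing page. It assumes a hypothetical exported row; the `LandingPageRow` class and its field names are illustrative and are not an Analytics schema.

```python
from dataclasses import dataclass


@dataclass
class LandingPageRow:
    # Hypothetical export row; field names are assumptions for illustration.
    page: str
    visits: int
    new_visits: int
    bounces: int
    goal_completions: int


def rates(row: LandingPageRow) -> dict:
    """Return percentage metrics for one landing page, guarding against zero visits."""
    if row.visits == 0:
        return {"pct_new_visits": 0.0, "bounce_rate": 0.0, "goal_conversion_rate": 0.0}
    return {
        # Share of visits made by first-time visitors.
        "pct_new_visits": 100 * row.new_visits / row.visits,
        # Share of visits that left after viewing only the landing page.
        "bounce_rate": 100 * row.bounces / row.visits,
        # Share of visits that completed a goal.
        "goal_conversion_rate": 100 * row.goal_completions / row.visits,
    }


row = LandingPageRow("/blog/", visits=200, new_visits=150, bounces=80, goal_completions=10)
summary = rates(row)
print(summary)
```

Reading the three numbers side by side for each landing page is what lets you tell a page that attracts new visitors but loses them immediately from one that converts.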

Again, we’re using the landing page to understand the performance of our content in search engine results.

Remember, make sure that you customize the report to include goals that are specific to your account. You can learn more about goals and conversions in our help center.

Another very useful organic analysis technique is to group your content together by ‘theme’ and analyze the performance. For example, if you are an ecommerce company you may want to group all of your pages for a certain product category together - like cameras, laptop computers or mobile phones.

You can use the Unified Segmentation tool to bundle content together. For example, here’s a simple segment that includes two branded pages (I’m categorizing the homepage and the blog homepage as ‘brand’ pages).
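The same bundling idea can be sketched outside the Analytics interface. The example below assumes you have exported landing pages with their visit counts; the page paths, the `BRAND_PAGES` set, and the helper names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical rule: the homepage and the blog homepage count as 'brand' pages.
BRAND_PAGES = {"/", "/blog/"}


def theme_for(page: str) -> str:
    """Assign a landing page to a theme based on its path."""
    return "brand" if page in BRAND_PAGES else "non-brand"


def visits_by_theme(rows):
    """Sum visits per theme from (landing_page, visits) pairs."""
    totals = defaultdict(int)
    for page, visits in rows:
        totals[theme_for(page)] += visits
    return dict(totals)


# Made-up sample data for illustration only.
rows = [
    ("/", 500),
    ("/blog/", 300),
    ("/cameras/dslr", 120),
    ("/laptops/ultrabook", 80),
]
totals = visits_by_theme(rows)
print(totals)
```

Additional themes, such as one per product category, could be added by extending `theme_for` with more rules.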

You can create other segments that include other types of pages, like specific category pages (and then view both segments together). Here is the Acquisition > Keywords > Organic report with both segments applied. This helps me get a bit more insight into the types of pages people land on when visiting from Google organic search results.

Plotting two segments, one for branded content landing pages and one for non-branded landing pages, can help you understand your specific tactics.

Regardless of the tool you use, the analysis technique is the same: look at the performance of each landing page to identify whether it is generating value for your business. And don’t forget, the best context for this data is your search engine marketing plan.

Here’s one final tip when analyzing organic traffic. Whenever you create a customization in Google Analytics, like a segment or custom report, don’t use the Keyword dimension. Instead, use the Source and Medium dimensions: set the Source to ‘Google’ and the Medium to ‘Organic’. This combination provides the most consistent data over long time periods.
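If you work with exported data, the same source and medium filter might look like the sketch below. The row layout and the `source`, `medium`, `landing_page`, and `visits` keys are assumptions for illustration; the comparison is made case-insensitive in case an export capitalizes the values differently.

```python
def is_google_organic(row: dict) -> bool:
    """Match rows by source and medium rather than by keyword."""
    return row["source"].lower() == "google" and row["medium"].lower() == "organic"


# Made-up sample rows; key names are hypothetical.
rows = [
    {"source": "google", "medium": "organic", "landing_page": "/", "visits": 500},
    {"source": "google", "medium": "cpc", "landing_page": "/", "visits": 90},
    {"source": "bing", "medium": "organic", "landing_page": "/blog/", "visits": 40},
    {"source": "Google", "medium": "Organic", "landing_page": "/blog/", "visits": 300},
]

google_organic = [r for r in rows if is_google_organic(r)]
organic_visits = sum(r["visits"] for r in google_organic)
print(organic_visits)
```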

In addition to using Google Analytics, you can also use the data from Webmaster Tools to gain an understanding of your search marketing tactics. You can link your Google Analytics account and your Webmaster Tools account to access some of this data directly in Google Analytics. If you’re not familiar with Webmaster Tools, check out their help center for an overview or this awesome video.

In general the Webmaster Tools data will help you understand how well your content is crawled, indexed and ranked by Google. This is extremely tactical data that can inform many search marketing decisions, like which content to create, how to structure your content and how to design your pages. The reports are in the Acquisition > Search Engine Optimization section.

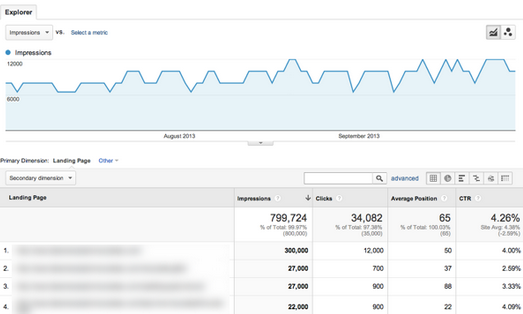

Let’s start by viewing some data using the Acquisition > Search Engine Optimization > Landing Pages report.

Webmaster Tools data is available directly in Google Analytics. You can view the data based on landing page or search query.

Let’s review a couple of metrics that are unique to Webmaster Tools: Impressions, Average Position and Click Through Rate. Impressions is the number of times pages from your site appeared in search results. If you’re continuously optimizing the content on your site you should see your content move up in the search results and thus get more impressions.

Average position is the average top position for a given page. To calculate average position, Webmaster Tools takes into account the top-ranking URL from your site for a particular query. For example, if Alden’s query returns your site as the #1 and #2 result, and Gary’s query returns your site in positions #2 and #7, the top positions are 1 and 2, so your average top position would be 1.5 [ (1 + 2) / 2 ].
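The calculation in the example above can be sketched in a few lines. This is only an illustration of the arithmetic as described here, not Webmaster Tools’ actual implementation, and the function and variable names are made up.

```python
def average_top_position(results_per_query):
    """Average the best (lowest-numbered) position your site earned in each query."""
    top_positions = [min(positions) for positions in results_per_query if positions]
    if not top_positions:
        return None  # The site did not appear for any query.
    return sum(top_positions) / len(top_positions)


# Positions at which the site appeared for each searcher's query.
queries = {
    "alden": [1, 2],  # top position is 1
    "gary": [2, 7],   # top position is 2
}
avg = average_top_position(queries.values())
print(avg)  # 1.5
```

Only the best position per query counts, so the #2 and #7 placements that are not the top result for their query do not affect the average.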

Click Through Rate (CTR) is the percentage of impressions that resulted in a click and visit to your site. Again, you can see both the impressions and the CTR for every landing page on your site.
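As a quick worked example of that definition, the sketch below computes CTR from click and impression counts. The numbers are made up and the function name is hypothetical.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage of impressions that resulted in a click."""
    if impressions == 0:
        return 0.0  # No impressions means there is nothing to click on.
    return 100 * clicks / impressions


ctr = click_through_rate(clicks=30, impressions=1200)
print(ctr)  # 2.5
```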

If we’re optimizing content then hopefully we should see our average position improve (move closer to #1), the impressions increase and ultimately an increase in click-throughs. A very easy way to observe this behavior is by applying a date comparison to the Acquisition > Search Engine Optimization > Landing Pages report.

Use the Search Engine Optimization > Landing Pages report to understand if your content is getting ranked higher and generating clicks.

What happens if impressions and average position are improving but you’re not getting clicks? You’re getting ranked better, but what is listed in the results may not get a response from the user.
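One way to spot this pattern across many landing pages is to compare two date ranges programmatically. The sketch below assumes hypothetical per-period dictionaries with `impressions`, `clicks`, and `avg_position` keys; a lower position number means a better ranking.

```python
def ctr(period: dict) -> float:
    """Clicks divided by impressions, or 0.0 when there were no impressions."""
    return period["clicks"] / period["impressions"] if period["impressions"] else 0.0


def needs_snippet_review(before: dict, after: dict) -> bool:
    """Flag a page that ranks better and is shown more, yet earns no better CTR."""
    more_impressions = after["impressions"] > before["impressions"]
    better_position = after["avg_position"] < before["avg_position"]
    ctr_not_improving = ctr(after) <= ctr(before)
    return more_impressions and better_position and ctr_not_improving


# Made-up numbers for one landing page in two date ranges.
before = {"impressions": 1000, "clicks": 50, "avg_position": 8.0}
after = {"impressions": 2000, "clicks": 60, "avg_position": 5.0}
flagged = needs_snippet_review(before, after)
print(flagged)  # True
```

Pages flagged this way are candidates for a closer look at how they appear in the search results.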

There are lots of ways to optimize your content and change what is listed in the search results. You could adjust your page title or meta description to improve the data that is shown to the user and thus increase the relevancy of the result and your Click Through Rate.

We’ll be back soon with another article on measuring and optimizing organic search traffic with Analytics.

Posted by Justin Cutroni, on behalf of the Google Analytics Education team